The Innovation Spectrum: What Two AI Cases Reveal About the Rules We’re Missing

WRITTEN BY: MARYAMA ELMI, JD

From grassroots breakthroughs to corporate IP battles, Masakhane and Getty Images reveal how current governance systems struggle to handle the speed, structure, and stakes of AI innovation.

This is the third article in the Owning Innovation series, which explores the evolving relationship between AI, intellectual property, and commercialization. Where the previous piece examined artistic mimicry and aesthetic appropriation, this one zooms out to examine innovation itself: how it is created, shared, and scaled, and what happens when the systems meant to govern it can’t keep up.

Introduction

AI is being developed all over the world, from elite labs and tech companies to volunteer collectives, student-led projects, and open-source communities. But while AI innovation is becoming more widespread, the systems that govern how it is recognized, protected, and shared have not kept up.

This is a problem of innovation governance: the set of laws, policies, norms, and institutions that shape how innovation happens, who contributes, who benefits, and what values guide that process (Really Good Innovation). It includes intellectual property (IP) systems, research funding, commercialization pathways, and institutional incentives, all of which influence whose work is protected, recognized, or left behind. At its best, innovation governance can encourage broad impact, give credit where credit is due, and help turn research into public value. At its worst, it can exclude, extract, or ignore.

Meanwhile, AI governance — the rules and practices that guide how AI is developed, used, and managed (IAPP) — does not fill the gap either. While the field is growing, it tends to focus more on ethics, safety, and risk mitigation. Questions of ownership, attribution, and fair compensation often fall outside the scope of current frameworks.

Today’s AI development moves faster than the rules designed to govern it. These frameworks were built for slower, more linear innovation, and not necessarily for complex, data-driven systems that evolve in real time.

This article examines that disconnect through two real-world case studies:

Masakhane: a decentralized network of African researchers building open-source language tools for underrepresented communities, and

Stability AI: a generative AI company operating at scale, whose use of copyrighted data to train models has raised global questions about legality, ethics, and ownership.

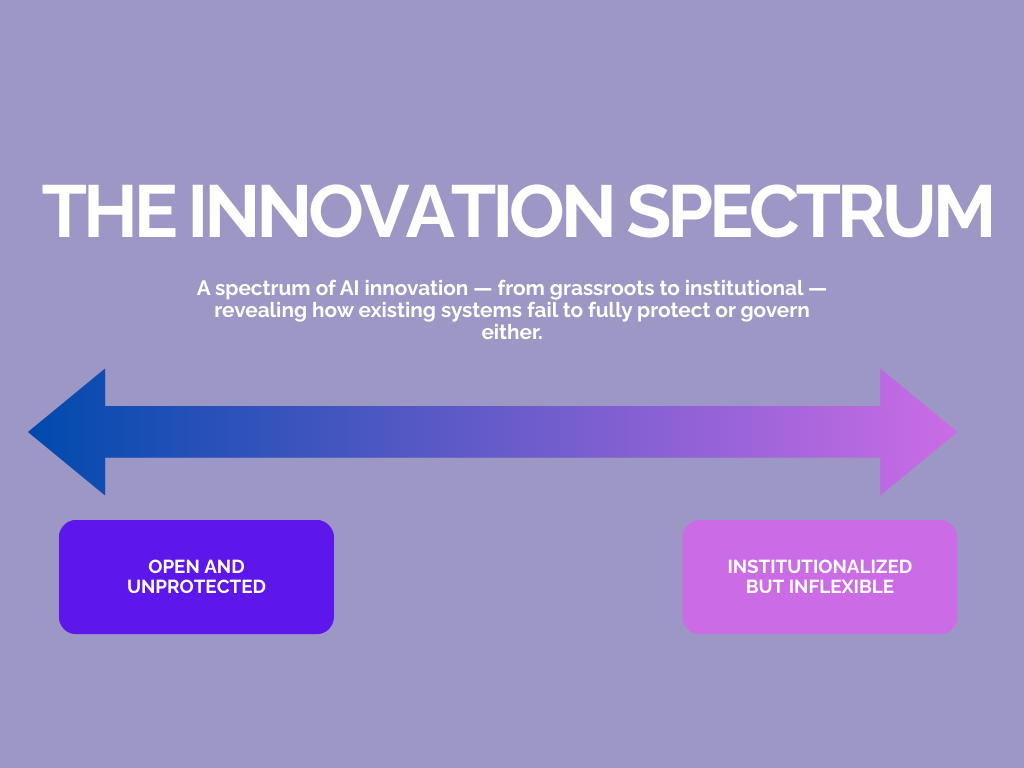

These case studies sit at opposite ends of what we call the Innovation Spectrum. They are opposites not just in how they operate, but in how they are governed. However, they both reveal the same flaw: we are relying on outdated systems to manage a new kind of innovation. And that misalignment is becoming increasingly difficult to ignore.

The Innovation Spectrum

Masakhane and Stability AI sit at opposite ends of the innovation spectrum. Masakhane is a grassroots, open-source community focused on accessibility and collective progress, yet it operates without the formal protections that would safeguard its contributions from being exploited.

Stability AI, by contrast, operates within a highly formalized governance environment. But this structure, while protective, reveals a different problem: it is not built to adapt. It struggles to account for the fluid and complex nature of AI development today.

Masakhane: Innovation Without Infrastructure

Let’s start at one end of the innovation spectrum.

Masakhane is a decentralized network of African researchers, students, and technologists building open-source tools for African languages. These tools use AI to understand, translate, and interact with human language in ways that reflect real linguistic diversity. Their work fills a crucial gap in global AI development. But it exists almost entirely outside the structures that typically protect or reward innovation.

Masakhane’s tools are open source, meaning the code is shared publicly so anyone can use, adapt, or build on it. This approach encourages collaboration and accessibility, but it also comes with some significant trade-offs. Without formal licensing, intellectual property protections, or commercialization pathways, their work can be reused without permission, credit, or compensation. In other words, there is no institutional backing to enforce ownership or ensure that benefits flow back to the communities that contributed the data and labor.

A 2024 report by the Carnegie Endowment for International Peace unpacks why this matters. The report highlights how open datasets from African NLP initiatives (including Masakhane) have already been reused in commercial tools, often without attribution or benefit-sharing. In some cases, these tools have even been sold back to the very communities that contributed the underlying data.

This is a clear example of extraction. In this context, extraction doesn’t necessarily mean something illegal or overtly exploitative. Rather, it refers to the process by which value is taken from a community without recognition, compensation, or any benefits flowing back to the original contributors (Horst et al., 2024). There is no legal obligation to credit or pay open-source contributors, and no governance mechanisms in place to ensure fair outcomes. The result is a familiar pattern: labor and knowledge from the Global South power innovation and profit in the Global North, while the original communities remain unrecognized and unrewarded (Horst et al., 2024).

As the Carnegie report puts it, “African NLP communities are innovating within a governance vacuum,” where neither local IP regimes nor international frameworks offer meaningful protection. In other words, open collaboration has enabled real progress, but it has also made that progress extractable.

This is not just an intellectual property issue. It’s a deeper failure of innovation governance: there is a lack of systems that recognize and support distributed, community-led innovation. Masakhane’s model is collective, public-interest-driven, and rooted in local context. But the global structures that govern AI innovation (i.e., IP law, research funding, and policy frameworks) were designed around centralized institutions in the Global North. These systems expect formal ownership, commercial scalability, and institutional control. When innovation doesn’t fit that mold, it often goes unrecognized and unprotected.

This gap extends into AI governance as well. While much of the conversation focuses on managing harms related to bias, safety, and transparency, among others, Masakhane reveals the need for governance frameworks that also enable innovation. That means creating space for equitable participation, rewarding public-good contributions, and preventing the quiet extraction of value from open communities.

Masakhane is not alone. Across the Global South, community-led innovation often exists outside the scope of both innovation governance and AI governance. These frameworks rarely account for collective, purpose-driven work. As a result, these contributions are often overlooked, undervalued, or left unprotected by the very systems meant to support innovation.

Getty Images vs. Stability AI: Innovation With Power, But Without Boundaries

At the other end of the spectrum is Stability AI, the private company behind Stable Diffusion, one of the most widely used generative image models. Getty Images has sued Stability AI in both the US and the UK for alleged copyright infringement. The lawsuits claim that Stability AI scraped Getty’s content to train its model without permission, and that the model’s outputs include synthetic images that substantially reproduce Getty’s copyrighted works, including visible brand markings (Pinsent Masons).

Stability AI operates in a highly formalized governance setting. It is well-funded, backed by institutional investors, and subject to intellectual property laws, regulatory institutions, and judicial systems in jurisdictions like the UK and US. But even within this structure, the current frameworks are failing to keep up. This case is not just about intellectual property. It exposes deeper failures in innovation governance. The legal and institutional systems responsible for managing the risks, rights, and responsibilities of emerging technologies were never built for models that learn from billions of data points and generate synthetic content at scale.

Additionally, core principles of AI governance, such as transparency, accountability, and fairness, are being tested in real time. Stability AI has admitted that “at least some” Getty images were used in training, but has not identified which ones. This lack of disclosure raises serious questions about how we govern AI systems that operate as black boxes (Charles Russell Speechlys).

The legal system is also struggling to manage the sheer volume of data involved. In pre-trial proceedings, the UK High Court noted that identifying which works were used in training would be “wholly disproportionate and practically impossible without significant resources” (Charles Russell Speechlys, 2024). This isn’t just a copyright enforcement challenge; it also reveals how the governance tools we rely on for innovation oversight are overwhelmed by the pace and scale of generative AI development.

What this case ultimately reveals is a governance vacuum. Stability AI is moving fast in an environment where laws exist, but don’t yet offer clarity on how to handle AI-generated content, how to compensate creators, or how to define ownership. In that gap, companies set their own standards, and the systems meant to guide them are left playing catch-up.

Same Problem, Different Ends of the Spectrum

At first glance, Masakhane and Stability AI could not be more different. One is a grassroots, community-led effort built on openness and public good. The other is a well-funded private company, backed by venture capital and operating within formal legal and commercial frameworks.

But despite these differences, both sit within governance systems that are failing them.

Masakhane creates value in the open, but lacks the infrastructure to protect it. Its tools can be reused, rebranded, and resold without acknowledgment or benefit to the community. Stability AI, meanwhile, operates within a formal system, but one that wasn’t designed for models trained on massive, mixed-source datasets. That ambiguity lets the company push the boundaries of what’s allowed.

Both stories point to the same blind spot in our governance landscape. The diagram below helps show where that gap lies.

This visual illustrates the core issue. Most AI innovation today lives in the messy middle — a space between two governance regimes.

This messy middle is where new approaches are most urgently needed. Innovation governance has traditionally focused on protecting and commercializing inventions, using tools like patents, IP policies, and tech transfer offices to define ownership after something has been created, usually within institutions. In contrast, AI governance focuses on managing risk by ensuring fairness, transparency, privacy, and ethical deployment.

But AI innovation increasingly lives in between: it is built on shared data, developed collaboratively across borders, and scaled through commercial platforms. This is where our current frameworks break down, and where both Masakhane and Stability AI reveal the cracks. Whether the innovation is grassroots or venture-backed, our systems are out of sync with how AI is actually built today.

A Path Forward

The key takeaway from the two case studies is that our current systems are not only outdated but fundamentally misaligned with how AI innovation actually works. This does not mean we have to start from scratch. Rather, we need to build real bridges between innovation governance and AI governance. That means:

Licensing models that recognize and respect open-source and collective contributions

Open-source AI relies heavily on community-driven datasets and models, but current licenses aren’t designed to capture that complexity.

The Linux Foundation and Open Source Initiative both emphasize the need for licensing approaches that account for data, not just code.

Attribution that acknowledges everyone who contributes — not just those with titles or trademarks

IP systems that reflect how AI is developed today: collaboratively, iteratively, and often across jurisdictions

Traditional IP regimes assume clear ownership, but AI systems are trained on mixed-source datasets with unclear boundaries.

The OECD and Linux Foundation both call for updated systems that reflect how AI is developed in practice.

Governance that expands beyond protection to assess equity, inclusiveness, and public value

Governance frameworks should go beyond compliance to consider justice, equity, and inclusion.

The UNESCO Recommendation on AI Ethics and the Ada Lovelace Institute both advocate for centering public interest and community participation in the future of AI governance.

Institutions, funders, and policymakers all have a role to play in redesigning systems that recognize the real pathways of AI development. The future of AI will be shaped not just by what we build, but by the frameworks we build around it. These frameworks should recognize collaboration, reward integrity, and balance progress with accountability. If we want to shape a future where AI works for everyone, we need systems that work for the full spectrum of innovation.

References

Ada Lovelace Institute. (2023). Participatory data stewardship: A framework for involving people in the governance of data use. https://www.adalovelaceinstitute.org/report/participatory-data-stewardship/

Carnegie Endowment for International Peace. (2024). AI and Data Justice in the Global South: African NLP as a Case Study. https://carnegieendowment.org/2024/03/05/ai-and-data-justice-in-global-south-pub-91988

Charles Russell Speechlys. (2024). UK court finds Stability AI’s copyright infringement case can proceed. https://www.charlesrussellspeechlys.com/en/news-and-insights/insights/intellectual-property/2024/stability-ai-copyright-infringement-case/

Horst, H. A., Sargent, A., & Gaspard, L. (2024). Beyond extraction: Data strategies from the Global South. New Media & Society, 26(3), 1366–1383. https://doi.org/10.1177/14614448231201651

IAPP (International Association of Privacy Professionals). (n.d.). What is AI governance? https://iapp.org/news/a/a-primer-on-artificial-intelligence-governance/

Linux Foundation. (2022). CDLA 2.0: Enabling Easier Collaboration on Open Data for AI and ML. https://www.linuxfoundation.org/press-release/enabling-easier-collaboration-on-open-data-for-ai-and-ml-with-cdla-permissive-2-0/

OECD. (2021). Data governance in the digital age: Enabling data-driven innovation while protecting rights. https://www.oecd.org/digital/data-governance.htm

Open Source Initiative. (n.d.). Community Data License Agreement (CDLA). https://cdla.dev/

Pinsent Masons. (2023). Getty Images lawsuit claims Stability AI copied 12 million photos. https://www.pinsentmasons.com/out-law/news/getty-images-lawsuit-claims-stability-ai-copied-12-million-photos

Really Good Innovation. (n.d.). What is innovation governance? https://www.reallygoodinnovation.com/innovation-governance

UNESCO. (2021). Recommendation on the Ethics of Artificial Intelligence. https://unesdoc.unesco.org/ark:/48223/pf0000381137

USPTO (United States Patent and Trademark Office). (2023). Request for comments on AI and inventorship. https://www.federalregister.gov/documents/2023/02/14/2023-03147/request-for-comments-on-artificial-intelligence-and-inventorship