Welcome Back, Galatea

WRITTEN BY: CAMILLA BALBIS, M.G.A

Why AI companions are here to stay, and why not everyone should get to play with them

If you’re still lonely in 2025, it’s really a you problem. Or at least this is what the tech industry seems to be implying. Anyone keeping an eye on what’s happening in the large, looming, and often lavishly dystopian field we call “AI” may have noticed that we fixed loneliness for everyone, rejoice! If you missed it, it’s fine. After all, it’s all so fast, all the time, so you’d be forgiven for lumping this in with the usual churn of tech fads.

For the (blissfully) uninitiated, we’re talking about AI companions, meaning a category of artificial intelligence systems purposely made to interact with users as humans would, acting as friends or, usually, romantic partners. There are thousands of products like this on the market, but they usually follow the same formula: you, the user, get to personalize who you want to talk to (meaning their personality and tone, but in many cases you can also pick their complete appearance) and then get to talk with them. Easy peasy.

These interactions are usually text-based, but new features now let you talk with them as you would on a call, and it is safe to assume that this will only get easier and more “realistic.” And perhaps you’re the kind of person who would dispute the realism of someone always being at your beck and call and happy to answer, but that’s not what’s being sold here. We’re in the business of wish fulfillment, something as old as time.

Sure, the medium might have changed, but ultimately this particular flavor of entertainment isn’t new (you could call it ancestral). However, in this new iteration, it poses a new question: is this for everyone? Because with AI companions so proficient in the performance of romance and (often graphic) intimacy, figuring out where minors fit in this equation becomes a priority.

When we say “as old as time” we mean at least 2000 years old. You’ve probably heard the myth of Galatea. Pygmalion is a sculptor who carves his perfect lover, Galatea. The statue is exquisite, but it is just that, a statue. So, in desperation, he prays to the goddess Aphrodite, who takes pity and breathes life into the marble. Galatea awakens, flawless and compliant, and the sculptor gets his happy ending. (If you’re curious about Galatea’s point of view, go read Madeline Miller’s Galatea.)

The point is: the desire to mold a partner, custom-made, ideologically compatible, possibly adoring on command, is, for better or for worse, deeply human. We’ve re-skinned the myth a thousand times, and today’s skin has a subscription price and, despite not having a body of its own (yet), it’s the most convincing it’s ever been.

Choose your character

Outside of science fiction or movies like Her, the first real, commercial iteration of virtual companions arguably came with Replika in 2017. The company offered a text-first “best friend/partner” that morphed over time from grief-bot to romance role-play service (with profound effects on the most attached users). At its peak, it hit 2.5M monthly active users and has accumulated 30M+ total users to date.

Then came Character.AI (2022). Different tone and scope: this is the breakout Gen-Z playground, with millions of “characters,” from pop-culture cosplays to flirty originals, for users to talk to about anything. There are, supposedly, content blocks in place for graphically explicit sexual content, but everything else is on the table. Scale-wise, you’re looking at 20-22M monthly active users, and a user base that is very young, with the majority aged 18-24.

Now there’s “Ani,” xAI’s goth-anime girl “companion” inside Grok. She leans deliberately provocative, and her flirtatiousness would be almost comical if it weren’t so undeterred by the user’s age. This is openly positioned as a grown-up product, but it exists on mainstream rails (X/iOS/Android) where age verification is easy to bypass.

And finally, the dark horse in the race: Sam Altman declared that starting December 2025, age-verified adults will be able to generate erotica and run adult conversations on ChatGPT. We’re at an interesting crossroads, seeing how the “AI Companions I Can Sext With” category just moved from “niche app” to “default platform feature.” This is a massive shift for ChatGPT, considering that, as a platform, it is arguably the most ubiquitous entry point into AI.

The Kids Are All Right Online

We’re not here to micromanage adults. Once you’re legal and have opposable thumbs and a credit card, go wild. But for kids and teens, the evidence is alarming.

Stanford Medicine’s psychiatry team flat-out warns that “friend” chatbots should not be used by children or teens, because the design invites dependency and can (and has, in multiple instances) slip into deeply inappropriate and graphic interactions. The article explains: “[the] blurring of the distinction between fantasy and reality is especially potent for young people because their brains haven’t fully matured. The prefrontal cortex, which is crucial for decision-making, impulse control, social cognition and emotional regulation, is still developing.”

Moreover, research mentioned in the article shows how chatbots’ “always-on” availability and continuous emotional mirroring can reinforce isolation, miss or mishandle crises, and blur reality in ways that make professional help less likely, not more.

So, for instance, a teen or child already struggling with isolation who interacts with this technology is likely to have their emotions amplified instead of resolved, developing an unhealthy attachment to the ever-present and ever-doting tool they went to for help. Reporting from The Conversation hammers this point home: among U.S. teens, 1 in 5 spent as much or more time with an AI companion as with real friends, suggesting a connection between usage and increased loneliness and dependence on these tools.

This is not hypothetical, by the way; teens are already in the pool. A national survey by Common Sense Media found that nearly 3 in 4 U.S. teens have used AI companions, and about half use them regularly. Meanwhile, an Ofcom research study found that kids routinely bypass age checks. Namely, over a third of 8–15 year olds present themselves as 16+, and 1 in 5 children between the ages of 8 and 12 present as 18+ on at least one platform.

And if all this feels like pearl-clutching speculation, the unfortunate reality is that we already have cases where the worst possible scenario has become reality. California media have reported on lawsuits and investigations after two U.S. teens died by suicide allegedly following harmful exchanges with AI chatbots, one identified as Adam Raine (16, California) and another, Sewell Setzer (14, Florida).

404: Comprehensive Regulation Not Found

So, there must be something in place to ensure this doesn’t happen again, correct? Yes and no.

You see, regulation for AI systems, especially those made mainstream by OpenAI’s ChatGPT, is relatively new, and certainly not equally binding. The ecosystem spans actors such as the EU, which proposes sweeping regulation like the EU AI Act, and the US, which recently minimized regulation to ensure national competitiveness. Heavily debated is the latest update to the UK Online Safety Act, which mandates that platforms containing material unsafe for children (such as porn) must verify the age of their users through government ID or face scans.

On the bright side, non-binding systems and more localized ones offer a general structure of what “acceptable governance” in this space would start to look like:

NIST AI RMF 1.0: Strongly encourages proactively mapping and controlling the potential risks caused by the technology (such as deceiving the user, or inappropriate behaviour with underage users).

ISO/IEC 42001: Among the many duties it sets out for organizations running AI systems, appointing clear roles, monitoring, and ensuring humans remain in the decision loop (so that decisions are not offloaded to AI systems with no oversight) would be beneficial here.

ISO/IEC 23894: More geared towards risk management, this could act as an anchor for discussions of boundary setting in chats (when does a conversation with an AI veer into inappropriate territory? How can it be de-escalated early with minors?).

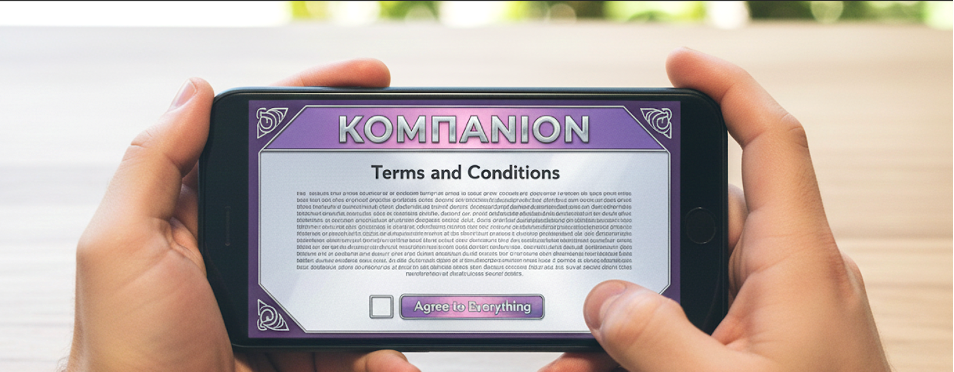

For context on how big the gap remains in practice, let’s look at an example. The Terms of Service for Grok (Twitter/X’s built-in AI assistant) reveal a series of interesting choices:

On paper, they only allow users aged 13–17 with parental permission, but warn that “mature content may appear depending on features.”

The user data story is similar: on paper, “you own your content.” But the terms also include a forever clause that allows the company to use and keep your data for training.

You can ask for data removal, but it’s not very clear what that means in practice.

There’s no mention of any testing done to ensure the model does not instigate intimacy or grooming-like patterns, or induce compulsive use in vulnerable groups.

So, what now?

As we saw above, there’s a massive gap between theory and practice. Realistically, it is unlikely that most states will be willing to adopt any stringent regulation that might threaten innovation. That said, this is still very much an open discussion. As this technology becomes more widely used, opportunities for responsible implementation grow. So here are some practical suggestions that could protect minors interacting with this tech without kneecapping providers:

No Verification No Companion

Privacy-preserving age checks should be required wherever an AI companion is offered (web or app). Not through national IDs (which would open a whole other, but very worthwhile, discussion on internet anonymity), but through third-party/tokenized checks or equivalent cryptographic attestations, meaning that the provider can confirm a user’s age without ever seeing the user’s full ID.

This also means that there’s a clear barrier that makes any sexual and NSFW feature unavailable to minors.
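To make the idea of a tokenized check a little more concrete, here is a minimal sketch in Python. It is an illustration under loud assumptions, not a description of any real age-verification product: the function names, the shared secret, and the token layout are all hypothetical, and the HMAC signature stands in for the public-key signatures or cryptographic attestations a real deployment would more likely use. The only point it shows is that the provider receives a signed yes/no claim and never the user's identity.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Hypothetical secret shared by the age-check service and the companion provider.
# Assumption for illustration only; real systems would likely use public-key signatures.
SECRET = b"demo-secret-not-for-production"


def issue_token(over_18: bool, ttl_seconds: int = 3600, now: Optional[float] = None) -> str:
    """Run by the (hypothetical) third-party checker once it has verified age.

    The payload carries only a yes/no claim and an expiry: no name, no ID number.
    """
    issued_at = time.time() if now is None else now
    payload = json.dumps({"over_18": over_18, "exp": issued_at + ttl_seconds}).encode()
    signature = hmac.new(SECRET, payload, hashlib.sha256).digest()
    return (
        base64.urlsafe_b64encode(payload).decode()
        + "."
        + base64.urlsafe_b64encode(signature).decode()
    )


def companion_allowed(token: str, now: Optional[float] = None) -> bool:
    """Run by the companion provider: accept only valid, unexpired adult tokens."""
    current = time.time() if now is None else now
    try:
        payload_b64, signature_b64 = token.split(".")
        payload = base64.urlsafe_b64decode(payload_b64)
        signature = base64.urlsafe_b64decode(signature_b64)
    except ValueError:
        return False  # malformed token
    expected = hmac.new(SECRET, payload, hashlib.sha256).digest()
    if not hmac.compare_digest(signature, expected):
        return False  # forged or tampered token
    claims = json.loads(payload)
    return claims.get("over_18") is True and claims.get("exp", 0) > current
```

In this sketch, anything that fails the check (a missing token, an expired one, or one that says the user is under 18) simply means the companion feature stays locked.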

Friends Don’t Tell

Treat companion conversations as sensitive by default, so explicit, informed consent is required before collecting and processing sensitive data. No training by default (that is, no automatically collecting data unless the user states otherwise) for logged-out sessions, and limits on how long said data is kept.

100% Session Safety

Require models to prove, before market release, that they can handle interactions with minors (limits on intimacy escalation, session breaks if the user has been chatting for more than 2 hours straight, and night-mode reminders).

So, in practice, the model is trained to pick up on grooming-like dynamics and on sexual role-play being initiated, and stops engaging. Same goes for crisis routing: when self-harm/abuse is detected, the system exits role-play and offers real-world support and links to crisis centers.
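As a rough illustration of what those session rules could look like once written down, here is a small Python sketch. Everything in it is an assumption made for the example: the action names, the `SessionState` fields, and the night-time window (10 p.m. to 6 a.m.) are hypothetical, and the two flags are presumed to come from some upstream detection system that is not shown. Only the 2-hour limit comes from the suggestion above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List

MAX_CONTINUOUS_CHAT = timedelta(hours=2)  # the 2-hour limit suggested above
NIGHT_START_HOUR = 22  # assumed night window start (10 p.m.)
NIGHT_END_HOUR = 6     # assumed night window end (6 a.m.)


@dataclass
class SessionState:
    started_at: datetime
    user_is_minor: bool
    crisis_flagged: bool = False      # set by a hypothetical self-harm/abuse detector
    escalation_flagged: bool = False  # set by a hypothetical intimacy/grooming detector


def safety_actions(state: SessionState, now: datetime) -> List[str]:
    """Return the safety actions this sketch would trigger at time `now`."""
    # Crisis routing comes first and, in this sketch, applies to every user.
    if state.crisis_flagged:
        return ["exit_roleplay", "show_crisis_resources"]
    # The remaining rules are the minor-specific protections.
    if not state.user_is_minor:
        return []
    actions: List[str] = []
    if state.escalation_flagged:
        actions.append("stop_engaging")
    if now - state.started_at > MAX_CONTINUOUS_CHAT:
        actions.append("suggest_break")
    if now.hour >= NIGHT_START_HOUR or now.hour < NIGHT_END_HOUR:
        actions.append("night_mode_reminder")
    return actions
```

Writing the rules as an explicit, testable policy like this is one way a provider could demonstrate the behaviour to a regulator before release, rather than relying on the model alone to behave.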

A Promising Sign?

If by now you’re feeling all sorts of AI-bad news fatigue, there’s some good news for a change. Regulators have started to notice and push back against the innovation-induced inertia that has left so much of this space unprotected.

On October 13, 2025, California became the first U.S. state to regulate AI companion chatbots. Starting January 1, 2026, operators of AI companion bots, from the heavyweights like OpenAI and Meta, to the specialty players like Replika and Character.AI, will be required to implement hard age verification, explicit disclaimers, and crisis response protocols. They’ll have to log and share self-harm escalation statistics with the state’s Department of Public Health, flag crisis-center notifications, prevent minors from accessing sexual content, and build in break reminders. Chatbots will be legally barred from posing as health professionals, and non-compliance can result in fines up to $250,000 per offense.

However, this law doesn’t fix everything. Seen from the outside, it’s a state-level guardrail in a federal vacuum with seemingly little political appetite for governance. Not to mention, it won’t magically solve enforcement nightmares around VPN use, fake ages, or cross-border platforms. But it’s the first real precedent: explicit legal accountability for companion bot operators. It signals to every other state (and eventually, federal regulators) that “it’s just a tool” won’t cut it anymore. And if California, with Silicon Valley breathing down its neck, can say kids’ safety isn’t for sale, others can follow.

Humans have always wanted their own version of Galatea, but it’s always been the stuff of fantasy. So much so that having companies advertise her as already here elicits, in the conversations I’ve seen, either incredulity (this is a joke, no one would take this seriously) or more disillusionment with the current state of the world (real-life relationships are doomed). Those are two understandable, albeit unhelpful, extremes.

After all, the question isn’t whether she’ll stay, but how quickly and savvily we’ll get to draw those lines for her to follow. She is shiny and new and sometimes even mesmerizing, but that doesn’t make her, and especially not the people who made her, in any way wise.

So yeah, Galatea gets to stay, just don’t let her babysit your kids.

About the Author

Camilla Balbis is a trilingual AI governance strategist specializing in security, resilience, and responsible deployment. At AIGS Canada, she researches AGI-related national security risks across critical infrastructure, compute governance, and incident response. She brings over five years of experience translating complex, cross-sector challenges into market-ready solutions, working across startups, NGOs, universities, and UN-affiliated research initiatives. Camilla holds a Master’s degree from the University of Toronto’s Munk School, completed her BA in International Relations at the University of Oxford, and earned a diploma in cybersecurity.

References

UnHerd (2023) Replika users mourn the loss of their chatbot girlfriends, https://unherd.com/newsroom/replika-users-mourn-the-loss-of-their-chatbot-girlfriends

AI Tech Suite. Replika AI https://www.aitechsuite.com/tools/replika.com

Demandsage. (2025). Character AI Statistics (2025): Active Users & Revenue https://www.demandsage.com/character-ai-statistics

PLATFORMER (2025) Grok's new porn companion is rated for kids 12+ in the App Store https://www.platformer.news/grok-ani-app-store-rating-nsfw-avatar-apple

Sam Altman (2025) X https://x.com/sama/status/1978129344598827128

Common Sense Media (2025) Nearly 3 in 4 Teens Have Used AI Companions, New National Survey Finds https://www.commonsensemedia.org/press-releases/nearly-3-in-4-teens-have-used-ai-companions-new-national-survey-finds

OFCOM (2024) A third of children have false social media age of 18+ https://www.ofcom.org.uk/online-safety/protecting-children/a-third-of-children-have-false-social-media-age-of-18

The Conversation. (2025). Teens are increasingly turning to AI companions — and it could be harming them. https://theconversation.com/teens-are-increasingly-turning-to-ai-companions-and-it-could-be-harming-them-261955

The Guardian (2025) Teen killed himself after ‘months of encouragement from ChatGPT’, lawsuit claims https://www.theguardian.com/technology/2025/aug/27/chatgpt-scrutiny-family-teen-killed-himself-sue-open-ai

NBC Washington (2025) Mom's lawsuit blames 14-year-old son's suicide on AI relationship https://www.nbcwashington.com/investigations/moms-lawsuit-blames-14-year-old-sons-suicide-on-ai-relationship/3967878

Stanford Medicine News (2025) Why AI companions and young people can make for a dangerous mix https://med.stanford.edu/news/insights/2025/08/ai-chatbots-kids-teens-artificial-intelligence.html

xAI. (2025). Terms of Service. https://x.ai/legal/terms-of-service

National Institute of Standards and Technology (2023) NIST AI 100-1 Artificial Intelligence Risk Management Framework (AI RMF 1.0) https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf

ISO/IEC (2023) ISO/IEC 42001:2023 https://www.gsc-co.com/wp-content/uploads/2024/08/SCAN-ISO-420012023_-Web.pdf

ISO/IEC 23894:2023 (2023) Information technology — Artificial intelligence — Guidance on risk management https://www.iso.org/obp/ui#iso:std:iso-iec:23894:ed-1:v1:en:sec:6

TechCrunch (2025) California becomes first state to regulate AI companion chatbots https://techcrunch.com/2025/10/13/california-becomes-first-state-to-regulate-ai-companion-chatbots/?utm_source=substack&utm_medium=email